My family has a 12 volt projector that we take camping with us. It's a "KickAss 12V Portable Outdoor Cinema Projector", and we have issues playing certain media types off USB flash drives because the projector often doesn't understand the audio codec (or sometimes even the video codec).

This is common with MKV files because MKV is a container format, and the audio inside it can use any of numerous codecs such as AC3, DTS, AAC and so on.

I noticed that certain MKV files would not play with audio on the projector due to no support for the Audio Format. If you open a file in VLC Media Player, you can press "CTRL + J" to view both the Video and Audio codecs being used as shown in the following screenshot.

I was able to work out quickly that it was A52 Audio (aka AC3) format that my projector didn't support.
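If you have many files to check, opening each one in VLC gets tedious. As a rough sketch (not something from my original workflow), the `ffprobe` tool that ships alongside ffmpeg can report the same codec information from the command line. The Python helper names below (`ffprobe_command`, `codecs_from_json`, `probe`) are made up for illustration, and the sketch assumes `ffprobe` is on your PATH.

```python
import json
import subprocess


def ffprobe_command(path, ffprobe="ffprobe"):
    """Build an ffprobe call that prints each stream's type and codec as JSON."""
    return [
        ffprobe,
        "-v", "error",                                    # hide the banner and warnings
        "-show_entries", "stream=codec_type,codec_name",  # only the fields we need
        "-of", "json",                                    # machine-readable output
        path,
    ]


def codecs_from_json(text):
    """Return (codec_type, codec_name) pairs from ffprobe's JSON output."""
    streams = json.loads(text).get("streams", [])
    return [(s.get("codec_type"), s.get("codec_name")) for s in streams]


def probe(path):
    """Run ffprobe on one file. Assumes ffprobe is installed and on PATH."""
    result = subprocess.run(
        ffprobe_command(path), capture_output=True, text=True, check=True
    )
    return codecs_from_json(result.stdout)
```

Calling `probe("movie.mkv")` on a file like mine should list an audio stream named `ac3`, which is ffmpeg's name for the A52 / AC3 format that VLC reports.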

Note: Many Smart TVs also have issues with some codecs. This blog post applies equally to a TV, projector or any other smart device that struggles to decode your video / audio files.

Knowing it's a codec issue, we needed to convert the video files to a format the projector could understand.

There are lots of tools on the Internet for converting the codecs in video files. However, as I had a lot of videos, I wanted to automate this across numerous files.

I came across a free command-line based conversion tool called ffmpeg that you can download from the following link:

Being open source and cross platform, it stood out from most of the paid software on the Internet. This program also has a heavy community base behind it.

Like many open source projects, it comes with an awesome manual covering all the syntax for the command line tool, available here.

To automate the conversion, move all your MKV files (or files in another video format) into a folder of their own.

Create a batch script as follows, name it something like convertffmpeg.bat, and place it in the same folder as your MKV files.

set avidemux="C:\ffmpeg-5.1.2-full_build\bin\ffmpeg.exe"

set videocodec=copy

set audiocodec=aac

for %%f in (*.mkv) do %avidemux% -i "%%f" -c:v %videocodec% -c:a %audiocodec% -channel_layout "5.1" "converted %%f"

This batch script does the following:

- Sets the location of ffmpeg.exe

- Tells ffmpeg.exe to keep the Video Codec "as is"

- Sets the Audio Codec to AAC format.

- Runs ffmpeg.exe with those settings for each MKV file in the folder.

- Names the converted files "converted + original filename.mkv"
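For anyone not on Windows, the same steps can be sketched in Python. This is only an illustration of the steps listed above, not the script I actually ran: the function names are hypothetical, the ffmpeg path is the one from my batch file, and skipping files that already start with "converted " is my own assumption so that a second run does not convert the output again.

```python
from pathlib import Path
import subprocess

# Same location as in the batch script; change this to wherever ffmpeg lives.
FFMPEG = r"C:\ffmpeg-5.1.2-full_build\bin\ffmpeg.exe"


def build_command(source, ffmpeg=FFMPEG):
    """Build the ffmpeg call for one file: copy video, re-encode audio to AAC."""
    target = source.with_name("converted " + source.name)
    return [
        str(ffmpeg),
        "-i", str(source),
        "-c:v", "copy",            # keep the video codec as is
        "-c:a", "aac",             # convert the audio to AAC
        "-channel_layout", "5.1",  # same layout option as the batch script
        str(target),
    ]


def pending_files(folder):
    """List MKV files in the folder, skipping earlier output (an assumption)."""
    return sorted(
        p for p in Path(folder).glob("*.mkv")
        if not p.name.startswith("converted ")
    )


def convert_all(folder, dry_run=True):
    """Print each command by default; pass dry_run=False to actually run ffmpeg."""
    for source in pending_files(folder):
        command = build_command(source)
        if dry_run:
            print(" ".join(command))
        else:
            subprocess.run(command, check=True)
```

Running `convert_all(".")` prints the commands without touching any files, which is a cheap way to check the file names before committing to a long conversion.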

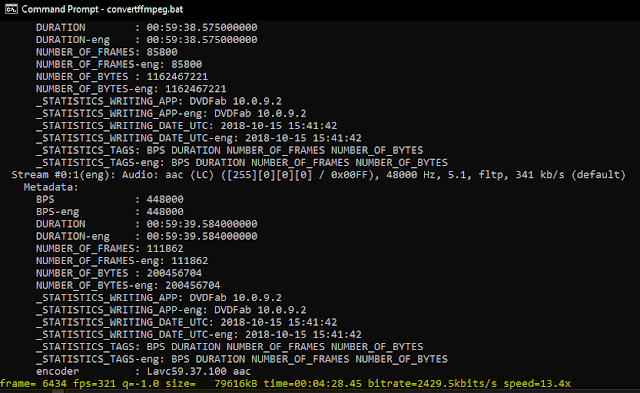

This script will automatically convert all files to the new format as shown in the following screenshot.

Note: In my example I converted the audio from AC3 format to AAC. During this conversion I had an issue with the 5.1 audio, and VLC showed the channels as "ERROR". The audio channels were also mapped incorrectly during the conversion (they should map Center to Center, Right Front to Right Front, Left Front to Left Front etc). Telling ffmpeg.exe that it was 5.1 channel audio with the channel_layout option fixed this issue. This article was also amazing at showing what type of Audio Channel Manipulation you can do: https://trac.ffmpeg.org/wiki/AudioChannelManipulation

I also tried setting the audio codec to MP3 without the channel_layout set to 5.1. This fixed the audio problem, but MP3 is only stereo (to my understanding), so it would have stripped the additional channels of audio. You may not need to specify the channel_layout if you're going to other formats, so I would try it without first!

This was also useful in understanding what audio codec types can go with what video formats.

After the script has processed all MKV files in the directory we can see the audio format has changed to MPEG AAC format.

Anyway hopefully this blog post was helpful - a quick way to convert your video files to a supported format for your TV / Projector to understand.